- October 23~27, 2022

- Kyoto, Japan

- Robotics

IROS 2022

The 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022)

The 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022) will be held on October 23~27, 2022 at the Kyoto International Conference Center, Kyoto, Japan. IROS is one of the largest and most impactful robotics research conferences worldwide. It provides a forum for the international robotics research community to explore the frontier of science and technology in intelligent robots and smart machines. The theme of IROS 2022 is “Embodied AI for a Symbiotic Society”. Sony looks forward to this year's exciting sponsorship and exhibition opportunities, featuring a variety of ways to connect with participants in person.

Recruiting information for IROS-2022

We look forward to highly motivated individuals applying to Sony so that we can work together to fill the world with emotion and pioneer the future with dreams and curiosity. Join us and be part of a diverse, innovative, creative, and original team to inspire the world.

For Sony AI positions, please see https://ai.sony/joinus/jobroles/.

*The special job offer for IROS-2022 has closed. Thank you for your many applications.

Industrial Forum

- Date & Time

- October 24 (Monday) 14:00-17:00 (JST)

- Venue

- Main Hall

- Event Type

- Presentation

Enhancing games with cutting-edge AI to unlock new possibilities for game developers and players.

Technologies & Business use case

Technology 01 Enhancing games with cutting-edge AI to unlock new possibilities for game developers and players.

We are evolving Game-AI beyond rule-based systems by using deep reinforcement learning to train robust and challenging AI agents in gaming ecosystems. This technology enables game developers to design and deliver richer experiences for players. The recent demonstration of Gran Turismo Sophy™, a trained AI that beat world champions in the PlayStation™ game Gran Turismo™ SPORT, embodies the excitement and possibilities that emerge when modern AI is deployed in a rich gaming environment. As AI technology continues to evolve and mature, we believe it will help spark the imagination and creativity of game designers and players alike.

Can an AI outrace the best human Gran Turismo drivers in the world? Meet Gran Turismo Sophy and find out how the teams at Sony AI, Polyphony Digital Inc., and Sony Interactive Entertainment worked together to create this breakthrough technology. Gran Turismo Sophy is a groundbreaking achievement for AI, but there's more: it demonstrates the power of AI to deliver new gaming and entertainment experiences.

Technology 02 Mobile Manipulator

We are developing manipulation technologies to handle various objects, including flexible and fragile objects, in a variety of environments. In order to handle flexible and fragile objects, we are developing unknown object grasping and control technology that can detect slip in any direction by mathematical modeling of tactile sense, proximity control technology that can align fingers to the appropriate surface of an object, and high-speed trajectory planning technology that can quickly find a trajectory that avoids collision with the environment. We have developed technologies to enable robots to be used in various environments, and are aiming to apply them to service robots.

Technology 03 User-centered Manipulator

In recent years, collaborative robot arms have emerged as robots that can operate in close contact with people, and they have come to be used in a variety of environments and with a variety of objects. Conventionally, putting a robot arm to use requires a robot specialist or a system integrator to construct a system around the robot, and the resulting system is complex and generally cannot be operated easily by untrained users. We are developing a user-centered manipulator, aiming for an era in which everyone can freely use robots. To bring robots closer to people, we are developing robot arm technology that lets the arm respond when any part of it is touched by a person or the environment, without exerting more force than necessary.

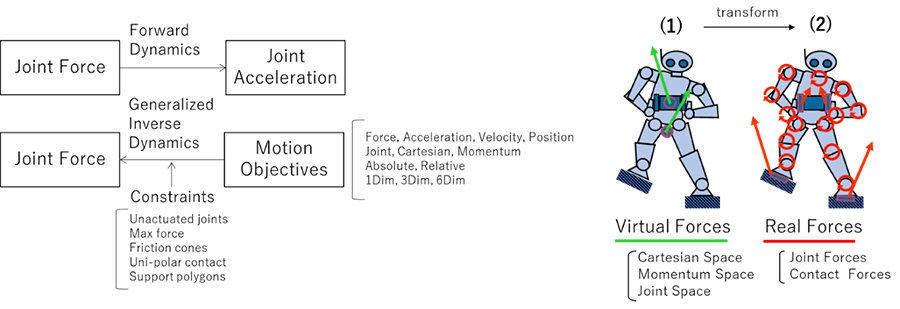

Generalized Inverse Dynamics (GID) [1]

To control the whole-body cooperative motion of a robot, it is necessary to model the whole mechanism mathematically and find the driving force of each joint that realizes the motion objective. GID is the computational technique for doing this.

Figure 1 : Generalized Inverse Dynamics (GID)
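As a much-simplified illustration of finding joint driving forces from a motion objective, the sketch below maps a desired force at the tip of a two-link planar arm to joint torques using the Jacobian transpose. This is a standard textbook relation and not the GID formulation of [1]; the link lengths and function names are hypothetical and chosen only for illustration.

```python
import math


def planar_jacobian(q1, q2, l1=0.3, l2=0.25):
    """Tip-position Jacobian of a two-link planar arm (angles in rad, lengths in m).

    The link lengths are hypothetical values, not taken from any Sony robot.
    """
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [
        [-l1 * s1 - l2 * s12, -l2 * s12],
        [l1 * c1 + l2 * c12, l2 * c12],
    ]


def joint_torques(q1, q2, fx, fy, l1=0.3, l2=0.25):
    """Joint torques that produce the tip force (fx, fy): tau = J^T f."""
    jac = planar_jacobian(q1, q2, l1, l2)
    tau1 = jac[0][0] * fx + jac[1][0] * fy
    tau2 = jac[0][1] * fx + jac[1][1] * fy
    return tau1, tau2


if __name__ == "__main__":
    # Arm stretched along the x axis, pushing with 1 N in the y direction.
    print(joint_torques(0.0, 0.0, 0.0, 1.0))
```

With the arm fully stretched along the x axis, a 1 N force in the y direction needs roughly 0.55 N·m at the first joint and 0.25 N·m at the second, while a force along the arm needs no joint torque at all. A whole-body method such as GID has to solve a far richer version of this problem, with dynamics, contacts, and multiple simultaneous objectives.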

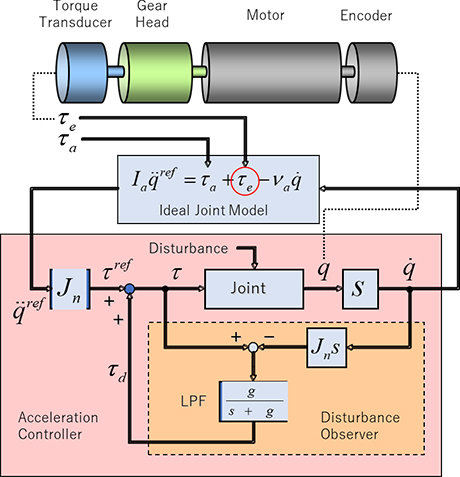

Ideal joint unit [2]

To achieve GID, it is necessary to cancel the actual friction and inertia of each joint and operate it in a condition close to the mathematically ideal model described above. The ideal joint unit we developed makes each joint behave with the inertia and viscosity specified by the virtual inertia and virtual viscosity parameters.

Figure 2 : Ideal joint unit
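One way to picture the target behavior is a joint whose velocity ω obeys I_v · dω/dt = τ − ν_v · ω, where I_v is the virtual inertia and ν_v the virtual viscosity. The sketch below integrates that assumed first-order model with explicit Euler steps. It illustrates the idea only and is not the controller described in [2]; all names and parameter values are hypothetical.

```python
def simulate_ideal_joint(torque, virtual_inertia, virtual_viscosity,
                         duration=5.0, dt=1e-3):
    """Return the joint velocity after `duration` seconds under a constant torque.

    Assumed model: virtual_inertia * d(omega)/dt = torque - virtual_viscosity * omega,
    integrated with explicit Euler steps of size `dt`, starting from rest.
    """
    omega = 0.0
    steps = int(round(duration / dt))
    for _ in range(steps):
        accel = (torque - virtual_viscosity * omega) / virtual_inertia
        omega += accel * dt
    return omega


if __name__ == "__main__":
    # Hypothetical parameters: 1 N*m torque, 0.1 kg*m^2 inertia, 0.5 N*m*s/rad viscosity.
    print(simulate_ideal_joint(1.0, 0.1, 0.5))
```

Under a constant torque this model settles at a velocity of τ / ν_v with a time constant of I_v / ν_v, so changing the two virtual parameters changes how heavy and how damped the joint feels, regardless of the real friction and inertia that the joint unit is cancelling.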

Wheelchair manipulator

As an example of the use of manipulators by general users, we are exhibiting a wheelchair manipulator this time. The manipulator is small and features safe and flexible operation. This work is being carried out in collaboration between the R&D Center Tokyo Laboratory 24, China laboratory and Shanghai Jiao Tong University.

Figure 3 : Wheelchair manipulator

[1] K. Nagasaka, A. Miyamoto, M. Nagano, H. Shirado, T. Fukushima, M. Fujita, “Motion Control of a Virtual Humanoid that can Perform Real Physical Interactions with a Human”, 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)

[2] K. Nagasaka, Y. Kawanami, S. Shimizu, T. Kito, T. Tsuboi, A. Miyamoto, T. Fukushima, H. Shimomura, “Whole-body Cooperative Force Control for a Two-Armed and Two-Wheeled Mobile Robot Using Generalized Inverse Dynamics and Idealized Joint Units”, 2010 IEEE International Conference on Robotics and Automation (ICRA)

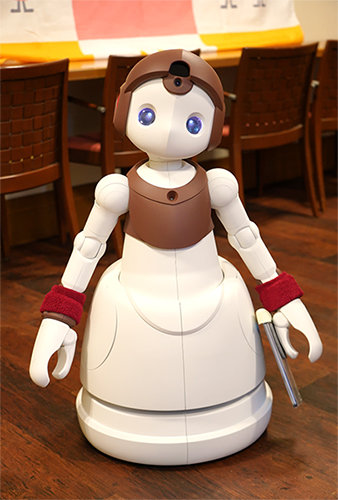

Technology 04 Nursing Robot

With the impact of the coronavirus pandemic and the decline in the working population, special nursing homes in Japan face many issues. The main issues are age-related cognitive decline and a decrease in the quality of life (QOL) of facility users.

In order to contribute to this field, we have developed a child-type nursing robot that can attend to users and provide individual applications for each user in a shared living room. In particular, we have developed applications such as "singing songs together," "temperature testing," and "telephone assistance with family" to match needs with technologies. Once a schedule is entered into the robot, these individual applications are executed autonomously for the users in the living room according to that schedule.

The robot was designed with the appearance and voice of a cute child, elements that are not easily forgotten and that connect with important memories, even for users with dementia. We then combine this robot with our autonomous motion technology to generate interactions that people with dementia readily accept. This incorporates the essence of the care technique called “Humanitude”, which is based on visual and vocal communication.

A demonstration experiment using this robot system was conducted at Yomiuri Land Flower House, a special nursing home for the elderly, at the end of last year. The results showed that users with dementia could recognize the robot, which they were seeing for the first time, talk to it, and sing along with it. The results were good enough to please the users and surprise the staff. In the future, we would like to further verify the value of the system and accelerate consideration of commercialization by operating it in nursing homes over a long period of time.

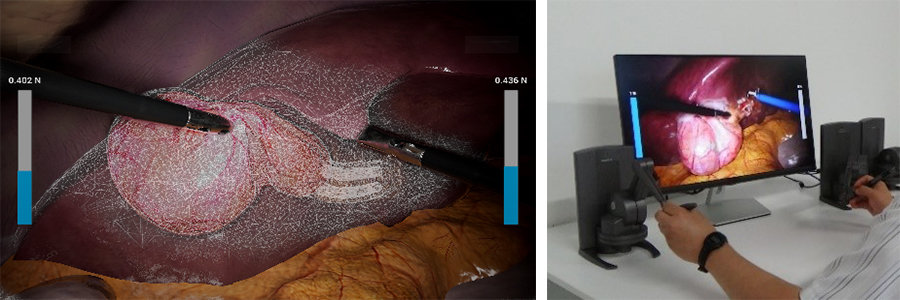

Technology 05 Physically-Photo-Realistic Surgical Simulator

Expectations for VR surgical training are growing rapidly, but existing technologies have limited visual quality and do not provide the physically accurate haptic feeling that is crucial for training. This simulator can simulate realistic organ deformations through real-time physics-based calculation and is able to provide precise haptic feedback. Surgeons can demonstrate surgeries in a virtual environment with photo-realistic visuals of the surgical procedure.

Disclaimer: This video contains computer graphics of a surgical simulation and may not be suitable for all audiences.

If you are easily offended, you should not view it.

Technology 06 Camera Robot

"We want to drive robot development for everyone"

Sony's robotics platform was born from this idea. We will provide all the fundamental technology that we have fostered over many years, such as autonomous navigation, human tracking, control of multiple robots, operational tools and system state detection. These tools will help you build the basic functionality that usually demands the most development effort.

As one of the applications of our platform, we are developing an autonomous camera robot for creators. This robot can achieve new camerawork by filming and moving in tandem with the artist's movements.

Technology 07 Super Low Latency Full Body Pose Control of a Small Bipedal Robot Using Vision Inputs

The small bipedal robot captures the user's pose and mirrors the movement as it is. The latency from the vision sensor, through estimation of the user's pose, to the robot's mechanism has been shortened to 55 msec, without the user wearing any sensors on the body. As a result, the robot moves as if it were attached to the user's body. The latency of pose tracking is shortened by combining pose recognition from image frames with the Event-based Vision Sensor (EVS), which serves as a differential input, so the robot is able to react to the user's intention. The improved time resolution from the EVS has enabled smooth movement. In addition, the high-speed robot feedback control system expresses the user's intention without the robot falling, even when it receives a posture input with strong momentum. Furthermore, even if the robot makes contact with an obstacle, it responds flexibly thanks to torque control.

※The Event-based Vision Sensor is “IMX636” developed by Sony Semiconductor Solutions Corporation

Technology 08 Sensing Solution University Collaboration Program (SSUP)

SSUP is built around "Sensing" and "Collaboration".

Our goal with the SSUP is to create innovative sensing solutions through open collaboration with a range of partners in higher education. In doing so, we aim to create a world of surprise and excitement.

The program offers real collaboration opportunities for a network of partners from university labs to teaching facilities. It encourages and supports research collaboration in the area of sensing solutions, and promotes innovation and education.

On this site, we present projects and case examples from the program.

Publications

Publication 01 Quantifying Changes in Kinematic Behavior of a Human-Exoskeleton Interactive System

- Authors

- Peter Stone (Sony AI)

- Abstract

- While human-robot interaction studies are becoming more common, quantification of the effects of repeated interaction with an exoskeleton remains unexplored. We draw upon existing literature in human skill assessment and present extrinsic and intrinsic performance metrics that quantify how the human-exoskeleton system's behavior changes over time. Specifically, in this paper, we present a new performance metric that provides insight into the system's kinematics associated with 'successful' movements, resulting in a richer characterization of changes in the system's behavior. A human subject study is carried out wherein participants learn to play a challenging and dynamic reaching game over multiple attempts, while donning an upper-body exoskeleton. The results demonstrate that repeated practice results in learning over time, as identified through the improvement of extrinsic performance. Changes in the newly developed kinematics-based measure further illuminate how the participant's intrinsic behavior is altered over the training period. Thus, we are able to quantify the changes in the human-exoskeleton system's behavior observed in relation to learning.

Other Conferences

- August 8~11, 2022

- Vancouver, Canada

- Computer Vision

SIGGRAPH 2022

The Premier Conference & Exhibition on Computer Graphics & Interactive Techniques

View our activities

- July 23~29, 2022

- Vienna, Austria

- ML/General AI

IJCAI 2022

IJCAI 2022, the 31st International Joint Conference on Artificial Intelligence

View our activities